Deep Learning and Art History

“The transformation of culture to data requires a process of translation, interpretation, and representation, which is often underemphasized in quantitative cultural analysis.” Amanda Wasielewski, Computational Formalism: Art History and Machine Learning

Computational processing—generative artificial intelligence, machine learning, analytics—in the digital humanities is a hotly debated topic. If the humanities operate as a series of repositories of societal self-image and governance, what happens when the outputs are reduced to algorithms, tokens, and pixels? Is it possible to operationalize, quantify, dissect, and configure (or reconfigure) human creativity and thought?

In reality, data analysis and its underpinning technology have been deployed by academic researchers for years. R programming and LiDAR assist archaeologists with large-scale topographical and dig site insights; stylometry models coded in Python assist literature scholars with author attribution. However, application of these kinds of computational tools is inconsistent: Dr. Leonardo Impett and Dr. Fabian Offert note that branches of the humanities that deal with visual outputs suffer from “the Laocoön problem of computation,” specifically the difficulty of wrestling, like Trojan Laocoön, with the entangled twin snakes of text and image.

As a postgraduate art history researcher in Southeast Asian art and visual culture, I regularly encounter a different set of entanglements related to cultural relevance and accessibility. Western institutions are interested in western European fine arts, and scholarly attention and funding flow towards those priorities; colonial and post-colonial collectors and institutions have acquired and buried artworks from the Global South; the effects of war, climate change, and political regimes are destructive. Preservation and display of these artifacts would be optimal, but images of these works and the facts of their existence (their metadata, if you will) are being lost too. At the same time, the AI and machine learning applications currently developed for art history are built for and trained on western European fine art. Entrenching such a small set of artworks limits the usefulness of AI and ML for anyone who wants or needs to work with different datasets: researchers, historians, teachers, institutions, museums and curators, art theft and trafficking prevention teams, and municipal or public-sector cultural heritage organizations.

Researching Art’s Geo-temporal Attributes

Solving for parity in art history is a lifetime’s work, but leveraging computational processing techniques to solve defined problems in under-examined, under-funded, and under-represented artistic traditions is very doable. One such problem is contextualization: I may be able to describe the colors, lines, and composition of an artwork (the formalist approach) and still lack the knowledge of where or when the work was created. My envisioned solution is a machine learning model for Researching Art’s Geo-temporal Attributes (RAGA), a multimodal model that integrates text and image datasets to create visual lineages of non-European artworks based on their production attributes, over time or across geographic distance. RAGA answers the where and when to assist researchers in answering the who and why.

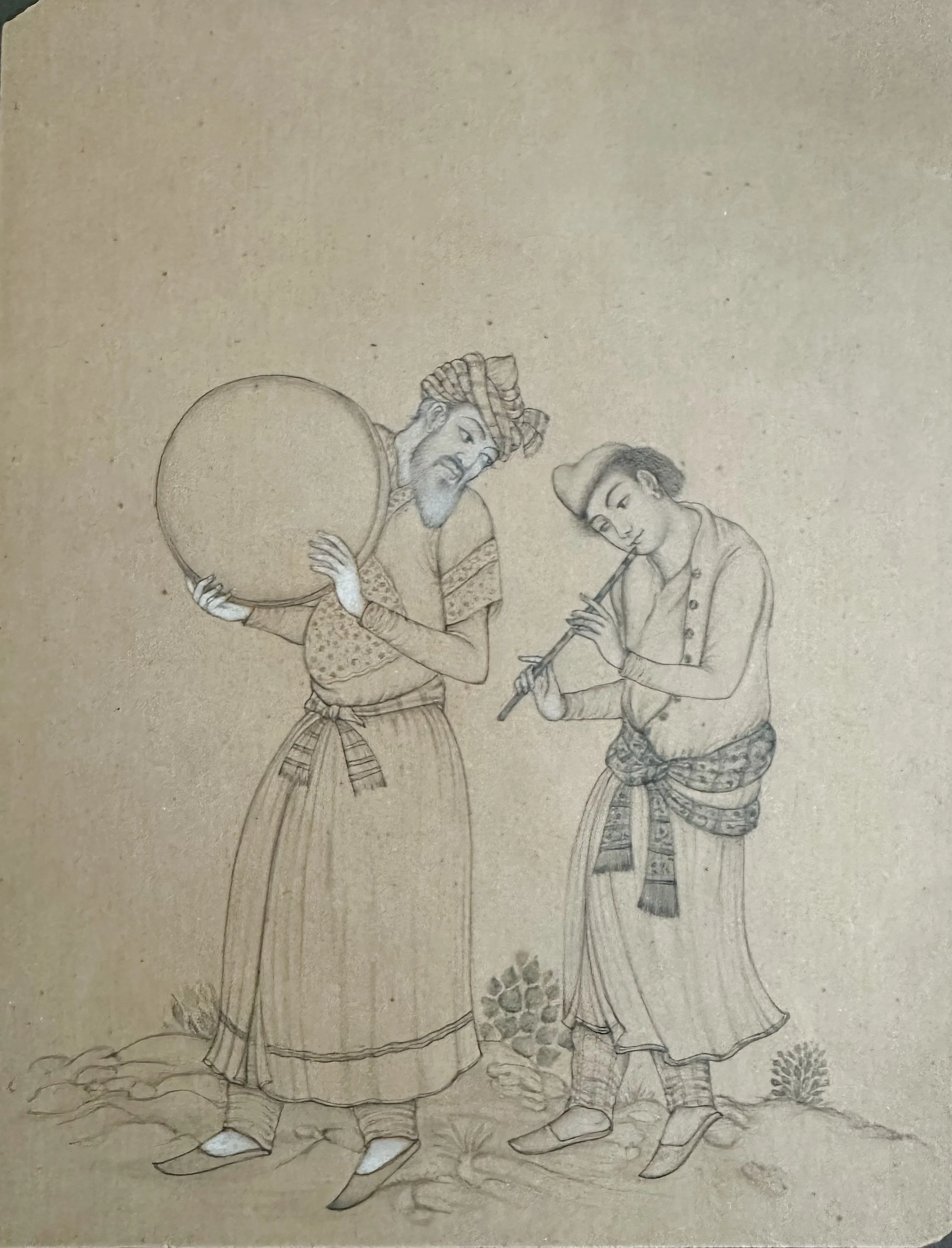

Consider the sketch above. You can identify that the figures are musicians and that the medium is pencil and white chalk on paper, and you might guess that the work was created in Asia. RAGA can also supply the insight that the drawing is Iranian, probably from the late nineteenth or early twentieth century, and show you similar drawings.
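
To make the idea of "similar drawings" more concrete, here is a minimal Python sketch of one way such a lookup could work. It is not RAGA's implementation. It assumes each artwork has already been turned into two small vectors (stand-ins for the outputs of an image encoder and a text encoder), fuses them by simple concatenation, and guesses the region and period of an unlabeled work by majority vote among its nearest labeled neighbors. The `Artwork` class, the helper functions, the catalog entries, and every number below are invented purely for illustration.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Artwork:
    title: str
    region: str       # known place of production
    period: str       # known date range
    image_vec: list   # stand-in for an image-encoder embedding
    text_vec: list    # stand-in for a text-encoder embedding


def normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def fuse(image_vec, text_vec):
    """Late fusion: normalize each modality, concatenate, renormalize."""
    return normalize(normalize(image_vec) + normalize(text_vec))


def cosine(a, b):
    """Cosine similarity of two vectors that are already unit length."""
    return sum(x * y for x, y in zip(a, b))


def nearest(query_vec, catalog, k=3):
    """Return the k (similarity, artwork) pairs closest to the query."""
    scored = [(cosine(query_vec, fuse(a.image_vec, a.text_vec)), a) for a in catalog]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def guess_attributes(neighbors):
    """Majority vote over the neighbors' region and period labels."""
    regions = Counter(a.region for _, a in neighbors)
    periods = Counter(a.period for _, a in neighbors)
    return regions.most_common(1)[0][0], periods.most_common(1)[0][0]


# Toy catalog: placeholder titles and made-up vectors, not real data.
CATALOG = [
    Artwork("catalog item 1", "Iran", "1875-1925", [0.9, 0.1, 0.0], [0.8, 0.2]),
    Artwork("catalog item 2", "Iran", "1875-1925", [0.8, 0.2, 0.1], [0.7, 0.3]),
    Artwork("catalog item 3", "Thailand", "1800-1850", [0.1, 0.9, 0.2], [0.2, 0.9]),
    Artwork("catalog item 4", "France", "1850-1900", [0.0, 0.2, 0.9], [0.5, 0.5]),
]

# An unlabeled drawing, represented the same way as the catalog entries.
query = fuse([0.85, 0.15, 0.05], [0.75, 0.25])
neighbors = nearest(query, CATALOG, k=3)
region, period = guess_attributes(neighbors)

print(region, period)
for score, art in neighbors:
    print(f"{art.title}: similarity {score:.2f}")
```

In this toy setup the neighbors double as the "similar drawings" a researcher would be shown, and their labels supply the where and when. A real system would need learned encoders, a far larger and more representative catalog, and some measure of uncertainty, none of which is attempted here.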